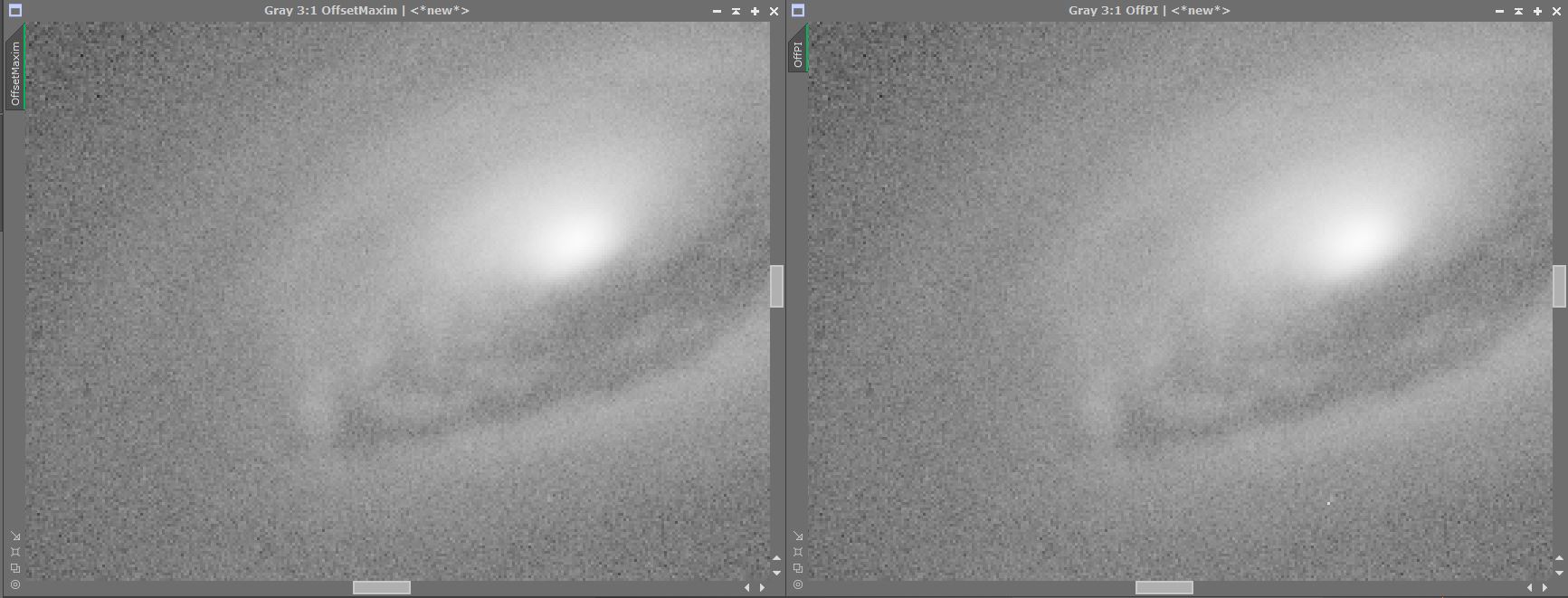

Now, since this is such marginal data, the SNR of this image does not warrant a successful deconvolution. We can examine the noise with PixInsight in different ways, but in this case a simple histogram stretch (or the ScreenTransferFunction) is already good enough to "see" it. Therefore, we will skip the deconvolution and just do a basic histogram stretch, so we can see what's in there.

Horror! The core of M42 is, again, saturated! Didn't we just do the HDRComposition to avoid this very problem? The HDRComposition tool has actually created a 64-bit float image (we can change that, but it's the default and, as you should remember, we used the default values).

Regardless, because it is still a linear image, it appears really dark on our screen.
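To make the "linear image looks dark until stretched" point concrete, here is a minimal sketch of a midtones-style histogram stretch in plain Python. It uses the standard midtones transfer function, which maps 0 to 0, the chosen midtones value to 0.5, and 1 to 1. The pixel values and the midtones balance of 0.01 are hypothetical numbers picked for illustration, not values taken from this data set, and the sketch is not meant to reproduce what PixInsight does internally.

```python
def mtf(m, x):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5 and 1 -> 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return ((m - 1.0) * x) / ((2.0 * m - 1.0) * x - m)


def stretch(pixels, midtones):
    """Apply the same non-linear stretch to every pixel value."""
    return [mtf(midtones, p) for p in pixels]


# Hypothetical linear pixel values, normalized to [0, 1]. Faint nebulosity
# sits very close to zero, which is why the unstretched image looks dark.
linear = [0.0, 0.001, 0.01, 0.05, 1.0]

# Assumed midtones balance: the value 0.01 is lifted to mid-gray (0.5).
stretched = stretch(linear, midtones=0.01)

for before, after in zip(linear, stretched):
    print(f"{before:.3f} -> {after:.3f}")
```

The stretch is monotonic, so it only changes how the data is displayed or redistributed. It does not recover detail that was clipped in the first place.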

Of course, since we've integrated two linear images, our resulting image is also linear, which is very handy if we want to run processes that work much better on linear images, such as deconvolution. You could tweak the parameters, but really the only things to adjust are the ones that define the mask creation: threshold, smoothness and growth. As we shall see, the default values already work pretty well. Considering that all we've done is open the HDRComposition tool, feed it the files and click "Apply", that's pretty amazing!
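As a rough illustration of what a mask defined by threshold, growth and smoothness can do, the sketch below combines a long and a short exposure along a single row of pixels. This is a simplified model under stated assumptions, not the actual algorithm inside the HDRComposition tool: the function names, the box blur, the default parameter values and the sample data are all hypothetical, and the exposure ratio of 10 simply mirrors a 5-minute versus 30-second pair.

```python
def saturation_mask(long_exp, threshold, growth):
    """1.0 where the long exposure reaches the threshold, grown by `growth` pixels."""
    n = len(long_exp)
    hot = [v >= threshold for v in long_exp]
    mask = []
    for i in range(n):
        lo, hi = max(0, i - growth), min(n, i + growth + 1)
        mask.append(1.0 if any(hot[lo:hi]) else 0.0)
    return mask


def smooth(mask, radius):
    """Box-blur the mask so the hand-over between exposures is gradual."""
    n = len(mask)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = mask[lo:hi]
        out.append(sum(window) / len(window))
    return out


def hdr_combine(long_exp, short_exp, exposure_ratio,
                threshold=0.8, growth=1, smoothness=1):
    """Blend two linear exposures; the result stays linear."""
    mask = smooth(saturation_mask(long_exp, threshold, growth), smoothness)
    # Bring the short exposure up to the flux scale of the long one.
    scaled_short = [v * exposure_ratio for v in short_exp]
    return [(1.0 - m) * l + m * s
            for l, s, m in zip(long_exp, scaled_short, mask)]


# Hypothetical scene along one row, in long-exposure flux units.
scene = [0.1, 0.2, 0.5, 3.0, 5.0, 3.0, 0.5, 0.2, 0.1]
long_exp = [min(v, 1.0) for v in scene]   # the bright core clips at 1.0
short_exp = [v / 10.0 for v in scene]     # 10x shorter, nothing clips

combined = hdr_combine(long_exp, short_exp, exposure_ratio=10.0)
print(combined)
```

In this toy case the combined row matches the unclipped scene, with values well above 1.0 in the core. That is one way to picture why the result needs a wide numeric range and why it still looks saturated under a simple stretch.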

Now that we have our two master luminance images nicely aligned, let's get to the bottom of it. HDRComposition works really well with linear images. In fact, if you feed it linear images, it will also return a linear image. That is a very useful feature, as you can create the HDR composition and then start processing the image as if the composed HDR image were what came out of your calibration steps.

The first step, once we have our set of images with different exposures nicely registered (just two in this example), is to add them to the list of Input Images. With that done, we simply apply (click on the round blue sphere), and we're done creating the HDR composition.

PixInsight core vs PixInsight new instance registration

RGB: 3x3 minutes per channel, binned 2x2 (27 minutes). Luminance: 6x5 minutes + 6x30 seconds (33 minutes). The two luminance sets are where we'll be doing the HDR composition. As I also mentioned in my last article, this is clearly NOT high-quality data. I only spent about one hour capturing all the data (both luminance sets and all the RGB data) in the middle of one imaging session, just for the purpose of writing these articles.

Of course, before we can integrate the two luminance images, all the subframes for each image need to be calibrated, registered and stacked. Once we have the two master luminance images, we should remove gradients and register them so they align nicely. The calibration and stacking can be done with the ImageCalibration and ImageIntegration modules in PixInsight, the registration can easily be done with the StarAlignment tool, and the gradient removal with the DBE tool (don't forget to crop "bad" edges first, caused by dithering or misalignment).
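The exposure figures quoted above can be checked with a few lines of Python. The dictionary layout and names are just a convenient way to write the plan down, and the sketch assumes "3x3 minutes each channel" means three 3-minute frames for each of R, G and B.

```python
# Sub-exposure plan from the text, as (frame count, seconds per frame).
luminance_sets = {"long": (6, 5 * 60), "short": (6, 30)}
rgb_channels = {"R": (3, 3 * 60), "G": (3, 3 * 60), "B": (3, 3 * 60)}


def total_minutes(plan):
    """Total integration time of a plan, in minutes."""
    return sum(count * seconds for count, seconds in plan.values()) / 60


lum_minutes = total_minutes(luminance_sets)
rgb_minutes = total_minutes(rgb_channels)

# Ratio between the long and short luminance sub-exposures.
exposure_ratio = luminance_sets["long"][1] / luminance_sets["short"][1]

print(lum_minutes, rgb_minutes, lum_minutes + rgb_minutes, exposure_ratio)
```

The totals come to 33 minutes of luminance and 27 minutes of RGB, which together make up the "about one hour" of capture time. The long luminance frames are 10 times longer than the short ones.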

PixInsight core vs PixInsight new instance plus

As I anticipated in my previous article, I'm going to explain one easy way to generate an HDR composition with the HDRComposition tool in PixInsight. The data is the same I used in my previous article, plus some color data to make it "pretty".
